What does it mean to augment objects, both technically and conceptually?

This week Rui brought a few thinking points into focus and walked us through a Vuforia tutorial. Class began by considering the philosophy of animism as a powerful approach to thinking about object augmentation.

“Animism is the belief that objects, places and creatures all possess a distinct spiritual essence. Potentially animism perceives all things — animals, rocks, rivers, weather systems, human handiwork, and perhaps even words as animated and alive (from wiki)”.

We also thought about the developmental psychologist Piaget and how kids are egocentric: for a number of years they tend to have only themselves as a reference for how they experience the world, and in that thinking they embody objects as a relational exercise. For example, instead of saying "we got lost" we sometimes say "the car got lost," when really it was a combination of us, our GPS, etc.

Rui challenged us to move beyond thinking about projection, past the hologram assistant.

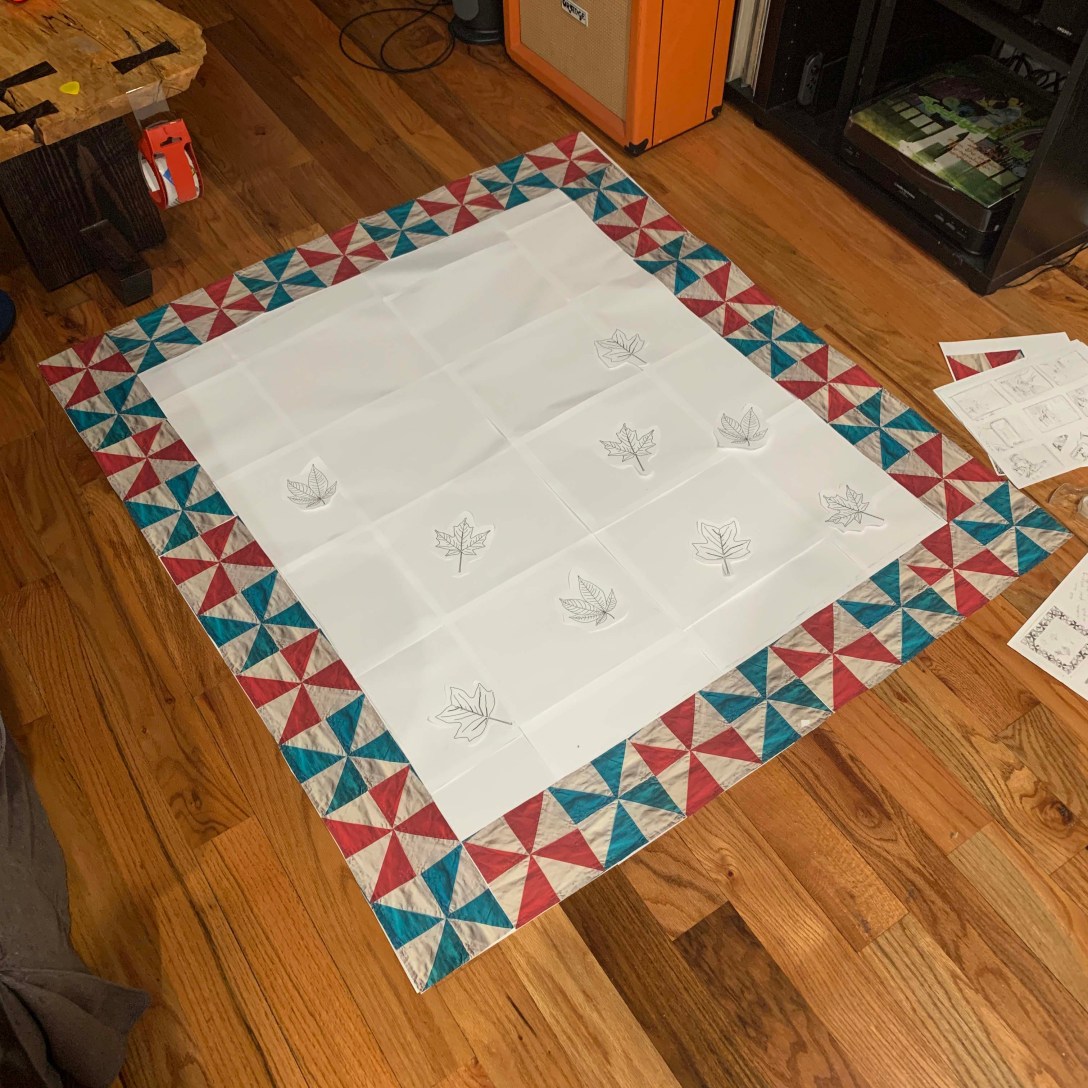

He also talked about how to augment objects as an interface. How can we extend a tool beyond its current functionality? Can a water bottle also tell you how much water you drank over the day? How do we invoke that extra function by looking at the form and materiality of things, and blend an object's form into the AR space so the two feel more naturally tied together?

He then brought up the phenomenon of pareidolia: seeing faces in everyday objects. The brain sometimes reads for easy patterns and connects the dots, making a quick assessment as a signal-to-noise mechanism and reaching conclusions that aren’t always correct, like an outlet being a face. Rui’s parallel example was hearing Portuguese vowel sounds when listening to Russian. Think also about how computer vision and facial recognition work.

Then we walked through a bunch of fun examples of augmenting objects.

Suwappu – Berg London

Garden Friends – Nicole He

Tape Drawing – Bill Buxton

- https://www.billbuxton.com/tapeDrawing.htm

Smarter Objects

- https://dspace.mit.edu/handle/1721.1/91844

- https://www.core77.com/posts/24829/mit-media-lab-fluid-interfaces-groups-smarter-objects-interface-design-24829

Invoked computing

- http://www.k2.t.u-tokyo.ac.jp/perception/invokedComputing/

- https://www.wired.com/2011/11/augmented-reality-university-of-tokyo-invoked-computing/

MARIO

Paper Cubes – Anna Fusté, Judith Amores

InForm

When Objects Dream – ECAL

HW for next week:

- Eyes on

Please read at least one of the following:

- Developing Augmented Objects: A Process Perspective

- Bricks: Laying the Foundations for Graspable User Interfaces

- Tangible Bits: Towards Seamless Interfaces between People, Bits and Atoms

Extra reading: Radical Atoms

It is a nice pairing with Ivan Sutherland’s ‘The Ultimate Display’.

- Hands on

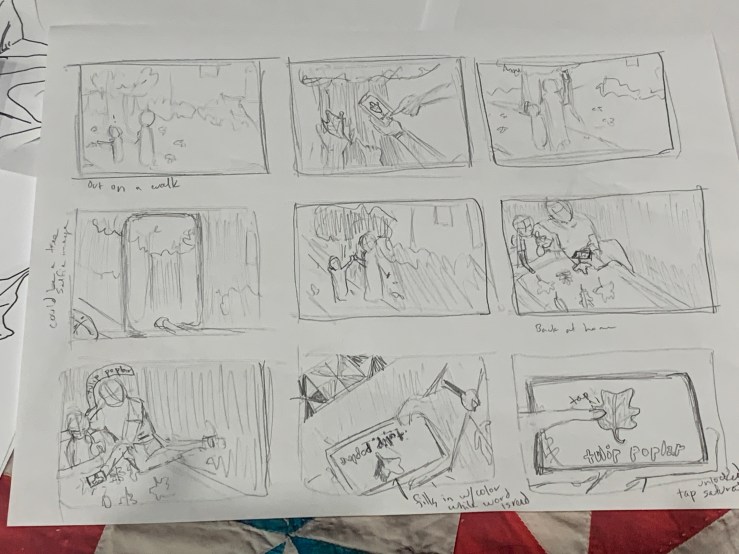

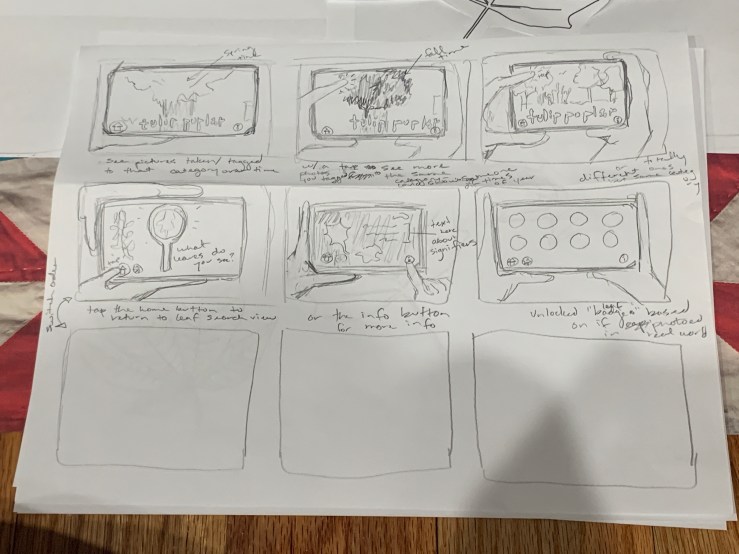

Write a brief paragraph about your idea on augmenting an object.

This should be the initial concept for next week’s assignment.

Be clear about your idea, inspirations and potential execution plan.

- Brain on

List potential input (sensory) and output (feedback) modes that you can leverage in the real world, then translate these into technical possibilities using current technology. You can use your mobile device, but feel free to bring any other technology or platform (Arduino, etc.) that you can implement.

Choose one of each (input, output) and create a simple experience to show off their properties as well as their affordances and constraints.