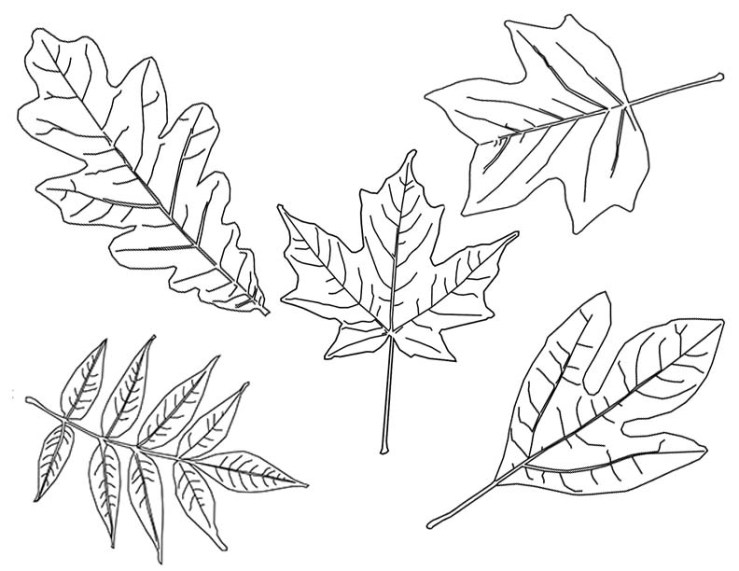

Ashley and I kept working alongside each other on our textile projects. Ashley continued weaving, and I worked on creating smaller image targets for a soft MVP quilt. I decided to hold off on the bottlebrush buckeye and choose another species for the future.

Depending on the ability to build out collections digitally, I may focus on only one to three species in the first stages. For now, though, I drew out space for six: five drawn and one held for a new species. I layered the images in Photoshop to make one printable stencil. I traced onto the muslin with the image underneath, first in pencil to mark and brainstorm positioning, and then, once everything was positioned, filled it in with Sharpie.